SpeedCurve Lighthouse vs other Lighthouse

Here's why your Lighthouse scores in SpeedCurve might be different from the scores you get elsewhere.

Lighthouse scores in SpeedCurve can differ from the scores you see in other tools, such as PageSpeed Insights (PSI), for several reasons. The most common ones are listed below, along with our recommendation not to focus too much on getting your scores to "match".

Different Lighthouse versions

PageSpeed Insights sometimes runs the same version of Lighthouse that we do, but from time to time the two may be out of sync. (Note that we typically run the latest release.)
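If you have the JSON output from both tools, a quick check like the one below can confirm whether the two runs used the same Lighthouse version. This is a minimal sketch: it assumes you have each report as a parsed JSON object, it relies on the `lighthouseVersion` field of the Lighthouse report and on the PageSpeed Insights API nesting that report under `lighthouseResult`, and the sample reports and version numbers are placeholders made up for illustration.

```python
from typing import Any, Dict


def lighthouse_version(report: Dict[str, Any]) -> str:
    """Return the Lighthouse version recorded in a report.

    Accepts either a raw Lighthouse JSON report or a PageSpeed Insights
    API response, which nests the report under "lighthouseResult".
    """
    lhr = report.get("lighthouseResult", report)
    return lhr["lighthouseVersion"]


def same_version(a: Dict[str, Any], b: Dict[str, Any]) -> bool:
    """True when both reports were produced by the same Lighthouse version."""
    return lighthouse_version(a) == lighthouse_version(b)


# Hypothetical, trimmed-down reports; the version numbers are placeholders.
speedcurve_report = {"lighthouseVersion": "11.0.0"}
psi_response = {"lighthouseResult": {"lighthouseVersion": "10.4.0"}}

print(same_version(speedcurve_report, psi_response))  # prints False
```

If the versions differ, scoring weights and audits may differ too, so a score gap between the two tools is expected.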

TTI, TBT and different test environments

The performance score is strongly influenced by Time to Interactive (TTI) and Total Blocking Time (TBT), which can be quite different depending on the test environment and runtime settings. The Lighthouse team have written some great background on what can cause variability in your scores.

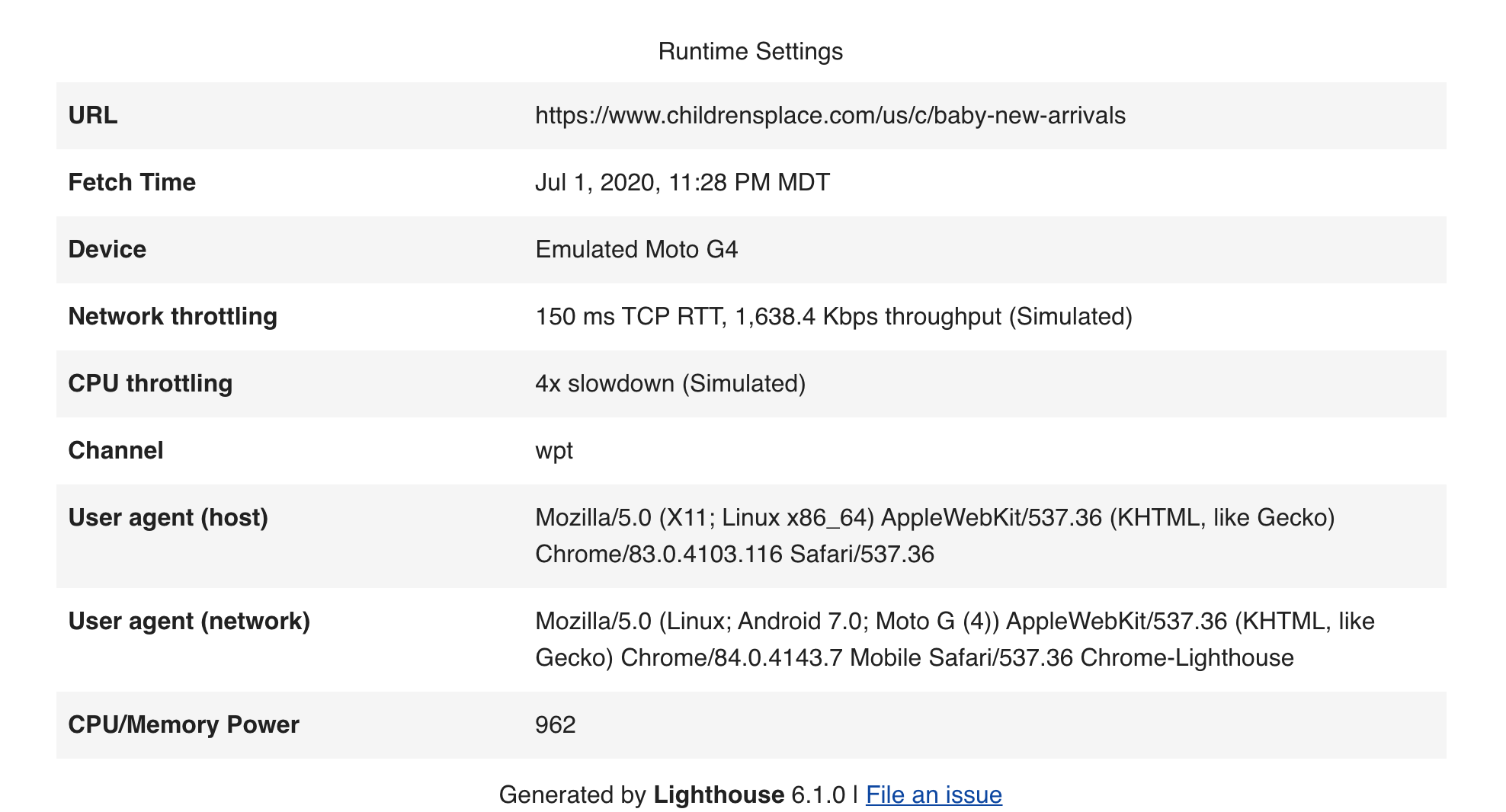

Runtime settings

At the bottom of the Lighthouse report, you will see the runtime settings used for the test. SpeedCurve's Lighthouse test runs now use the official Lighthouse throttling settings for mobile and desktop. We do not override these settings based on the test environment, so the settings you get when running Lighthouse outside of SpeedCurve may be different.
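The same runtime settings are recorded in the JSON version of a Lighthouse report under `configSettings`, so two reports can be compared programmatically. The sketch below is one way to do that, not an official tool: `diff_settings` and `SETTINGS_OF_INTEREST` are hypothetical names, the sample values are made up for illustration, and only a handful of settings are compared.

```python
from typing import Any, Dict, Tuple

# Settings that most often explain score differences between environments.
SETTINGS_OF_INTEREST = ("formFactor", "throttlingMethod", "throttling")


def diff_settings(
    a: Dict[str, Any], b: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return the runtime settings that differ between two Lighthouse reports.

    Keys are setting names (nested throttling values use dotted names);
    values are (value_in_a, value_in_b) pairs.
    """
    settings_a = a.get("configSettings", {})
    settings_b = b.get("configSettings", {})
    diffs: Dict[str, Tuple[Any, Any]] = {}
    for key in SETTINGS_OF_INTEREST:
        va, vb = settings_a.get(key), settings_b.get(key)
        if isinstance(va, dict) and isinstance(vb, dict):
            # Compare nested throttling values one by one.
            for sub in sorted(set(va) | set(vb)):
                if va.get(sub) != vb.get(sub):
                    diffs[f"{key}.{sub}"] = (va.get(sub), vb.get(sub))
        elif va != vb:
            diffs[key] = (va, vb)
    return diffs


# Hypothetical, trimmed-down reports for illustration only.
report_a = {"configSettings": {
    "formFactor": "mobile",
    "throttlingMethod": "simulate",
    "throttling": {"rttMs": 150, "cpuSlowdownMultiplier": 4},
}}
report_b = {"configSettings": {
    "formFactor": "mobile",
    "throttlingMethod": "devtools",
    "throttling": {"rttMs": 150, "cpuSlowdownMultiplier": 1},
}}

for name, (in_a, in_b) in diff_settings(report_a, report_b).items():
    print(f"{name}: {in_a} vs {in_b}")
```

An empty result means the compared settings match; any remaining score difference is then more likely due to hardware, location, or Lighthouse version.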

Test hardware and location

In addition to runtime settings potentially being different, the test servers themselves may be very different. This is true if you are running your own Lighthouse environment, and also if you are using the PageSpeed Insights API. PSI uses different servers based on your physical location (presumably the closest or most optimal server). SpeedCurve runs Lighthouse tests from the locations specified in your Site Settings.

SpeedCurve runs Lighthouse separately to the main test

The Lighthouse report doesn't reuse the same page load that SpeedCurve does. It's a separate page load done at the end of the SpeedCurve test, so the metric values will be different.

Lighthouse is run only once; it doesn't use the "Set how many times each URL should be loaded" setting.

You can't directly compare the metrics you see in the SpeedCurve UI, such as "Time To Interactive", with the metrics in the Lighthouse report. Depending on the nature of your page and the network throttling in your test settings, there could be some variation between page loads.

Lighthouse does not support SpeedCurve Synthetic scripts

Because Lighthouse does not run SpeedCurve Synthetic scripts, tests that rely on multistep scripts, authentication scripts, or scripts that set cookies may have very different results due to broken navigation.

Our recommendation: Don't overly focus on wondering why your metrics don't "match"

We've tried to make it easy to compare the recommendations and metrics coming from those different sources. But we recommend that you don't overly focus on wondering why your metrics don't "match". The idea is to have them all in one place so you can compare and decide which to focus on.

You shouldn't really consider any of these metrics as "reality". That's what RUM is for. Synthetic testing is more about establishing a baseline in a clean and stable environment, and then improving those metrics by X% over time.
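To make "improving by X%" concrete, the change is measured relative to the baseline value from the same tool and environment. The sketch below is only an illustration: `percent_change` is a hypothetical helper, and the Total Blocking Time values are made-up numbers rather than real measurements.

```python
def percent_change(baseline: float, current: float) -> float:
    """Return the change from baseline to current as a percentage.

    Negative values mean the metric went down, which is an improvement
    for timing metrics such as Total Blocking Time.
    """
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100.0


# Made-up example: TBT baseline of 400 ms, later measured at 300 ms.
print(percent_change(400, 300))  # prints -25.0
```

Comparing each tool against its own baseline avoids the problem of scores from different environments not matching.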
