Bookmark and compare

Generate side-by-side comparisons that let you not only spot regressions, but easily identify what caused them.

A huge benefit of tracking web performance over time is the ability to see trends and compare metrics.

With SpeedCurve, you can bookmark different data points in your monitoring history and compare them side by side. This lets you:

- Compare the same page at different points in time

- Compare two versions of the same page – for example, one with ads and one without

- Understand which metrics got better or worse

- Identify which common requests got bigger/smaller or slower/faster

- Spot any new or unique requests – such as JavaScript and images – and see their impact on performance
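Here's a minimal Python sketch that makes "common" versus "new or unique" requests concrete by partitioning two tests' requests by URL. The `Request` record and the `partition_requests` function are hypothetical. Matching on exact URLs is also an assumption, and SpeedCurve's own matching logic may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Hypothetical per-request record taken from a test's waterfall."""
    url: str
    size_bytes: int
    duration_ms: float


def partition_requests(a: list[Request], b: list[Request]):
    """Split the requests of test A and test B into common and unique sets.

    Requests are matched by exact URL (an assumption). If a URL appears more than
    once in the same test, only its last occurrence is kept.
    Returns (deltas, only_in_a, only_in_b), where deltas maps each common URL to
    its (size change in bytes, duration change in ms) from A to B.
    """
    by_url_a = {r.url: r for r in a}
    by_url_b = {r.url: r for r in b}
    common = by_url_a.keys() & by_url_b.keys()
    deltas = {
        url: (
            by_url_b[url].size_bytes - by_url_a[url].size_bytes,
            by_url_b[url].duration_ms - by_url_a[url].duration_ms,
        )
        for url in sorted(common)
    }
    only_in_a = sorted(by_url_a.keys() - by_url_b.keys())
    only_in_b = sorted(by_url_b.keys() - by_url_a.keys())
    return deltas, only_in_a, only_in_b
```

A positive delta means a common request got bigger or slower in test B. Anything in `only_in_b` is a new request whose impact on performance is worth checking.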

How to bookmark tests or sessions

(If you're more into watching than reading, scroll down to the bottom of this article and check out our explainer video.)

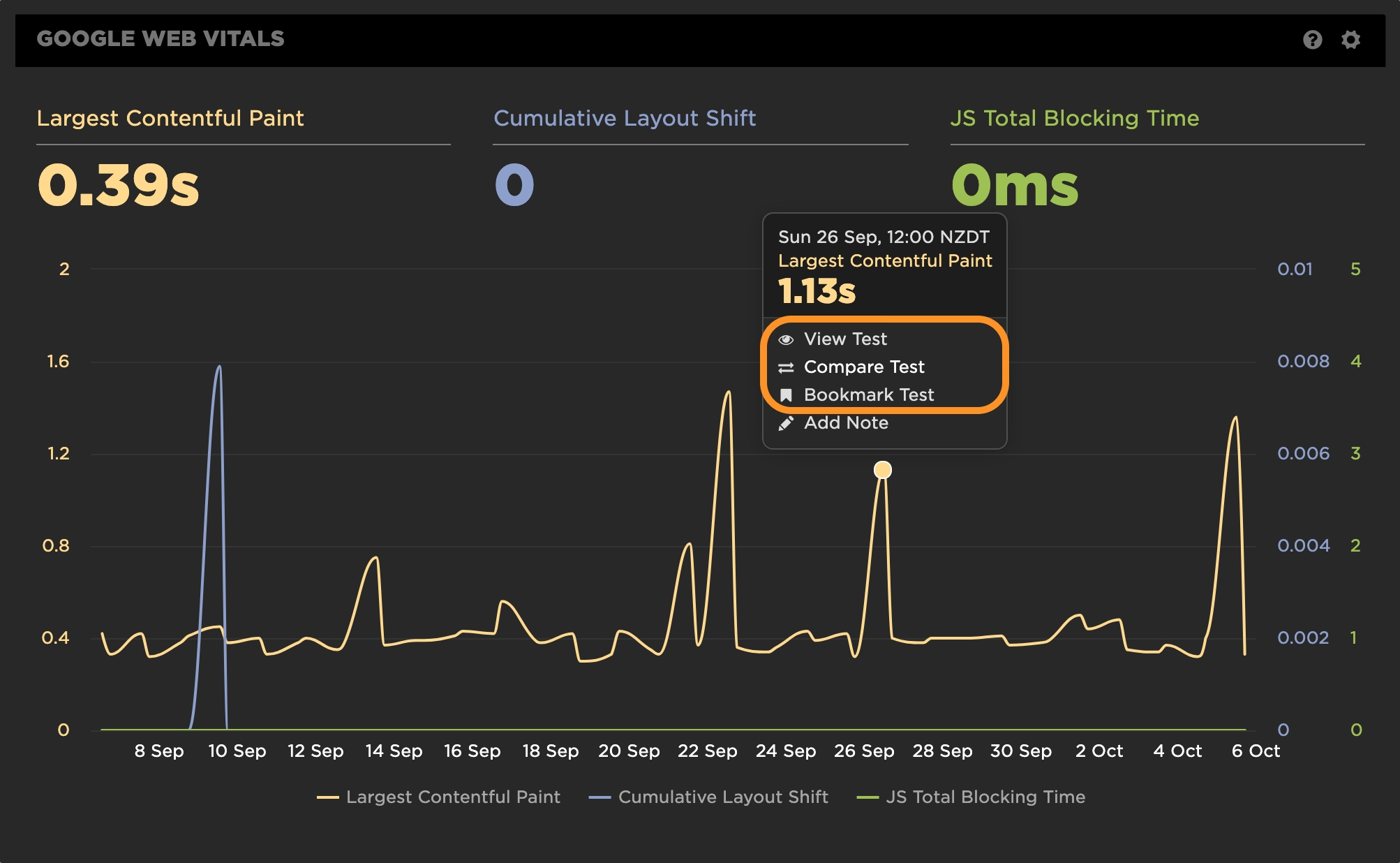

1. To get started, hover over a data point in any time series chart and click it.

Bookmark a synthetic test:

Within the popup, you can click "View Test" to see the full median test result for that test run. Or, if you already know that you want to bookmark that test for comparison, you can simply hit "Bookmark Test" within the popup.

Bookmarking a test from a chart

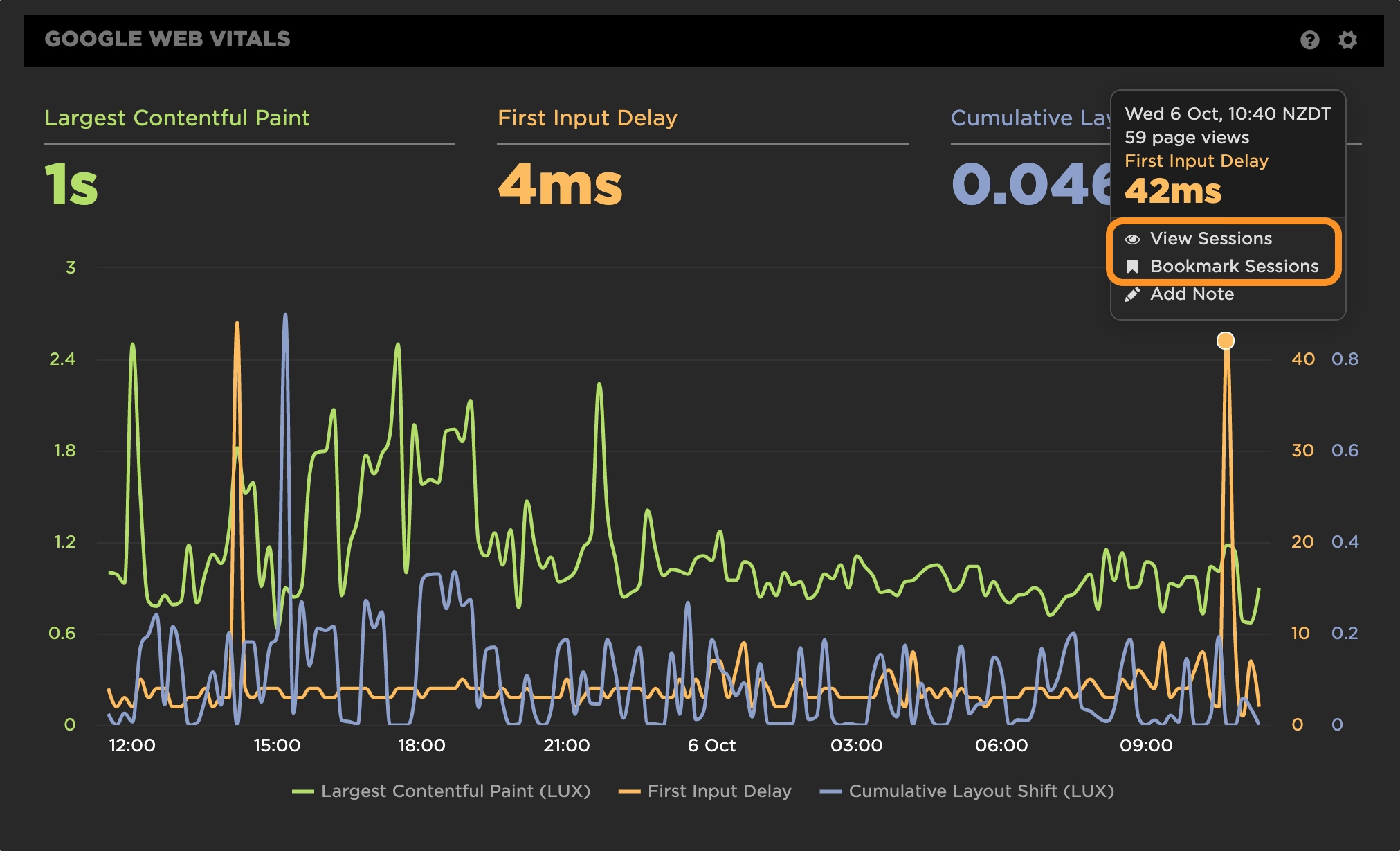

Bookmark RUM sessions:

Within the popup for a RUM time series chart, you can click "View Sessions" if you want to explore that dataset. Or, if you want to save them now, click "Bookmark Sessions".

Bookmarking a group of sessions from a chart

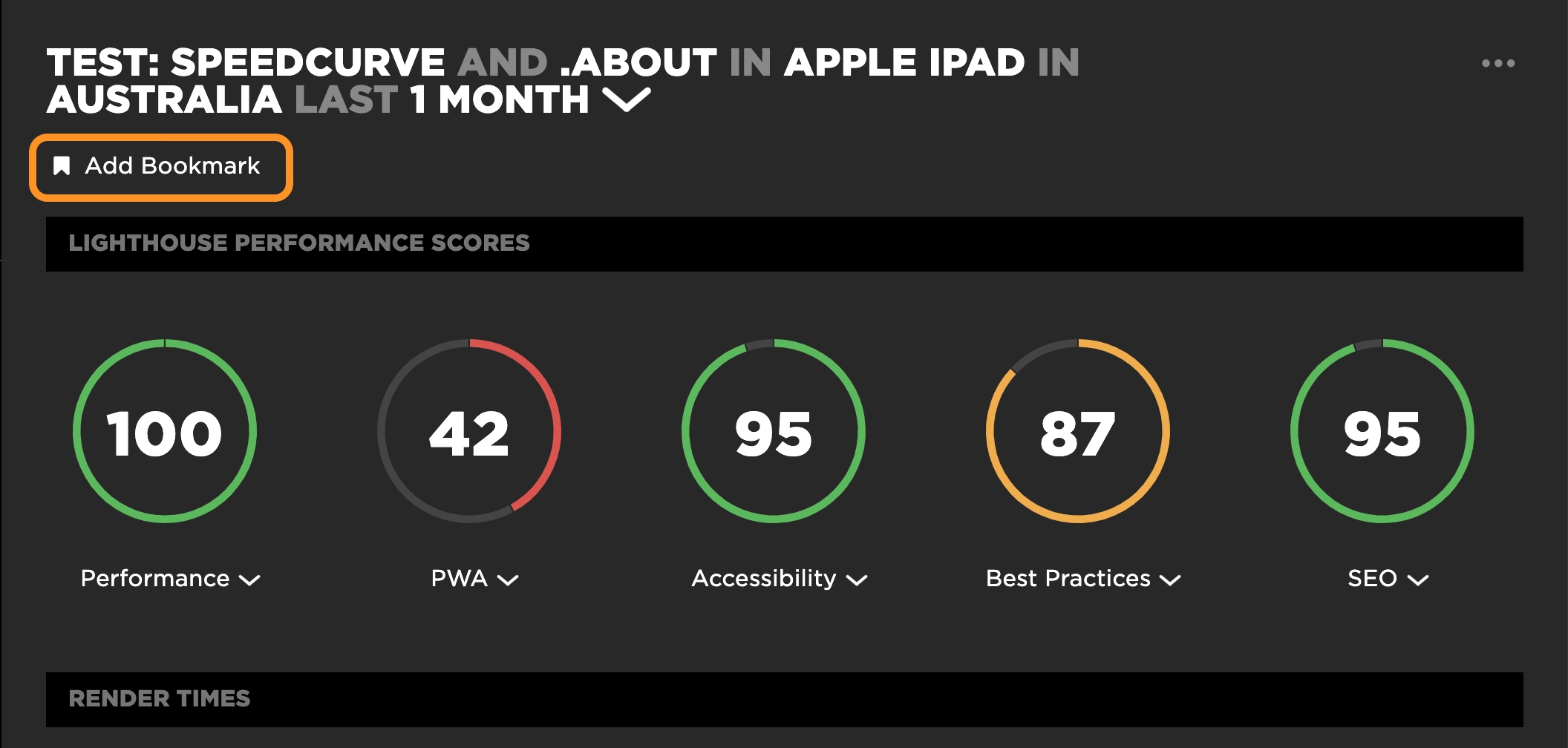

2. If you've drilled down into a Synthetic test result or the RUM Sessions dashboard and confirmed that you want to bookmark it for comparison, all you need to do is click the "Add Bookmark" link in the top-left corner of the window.

Bookmarking a test

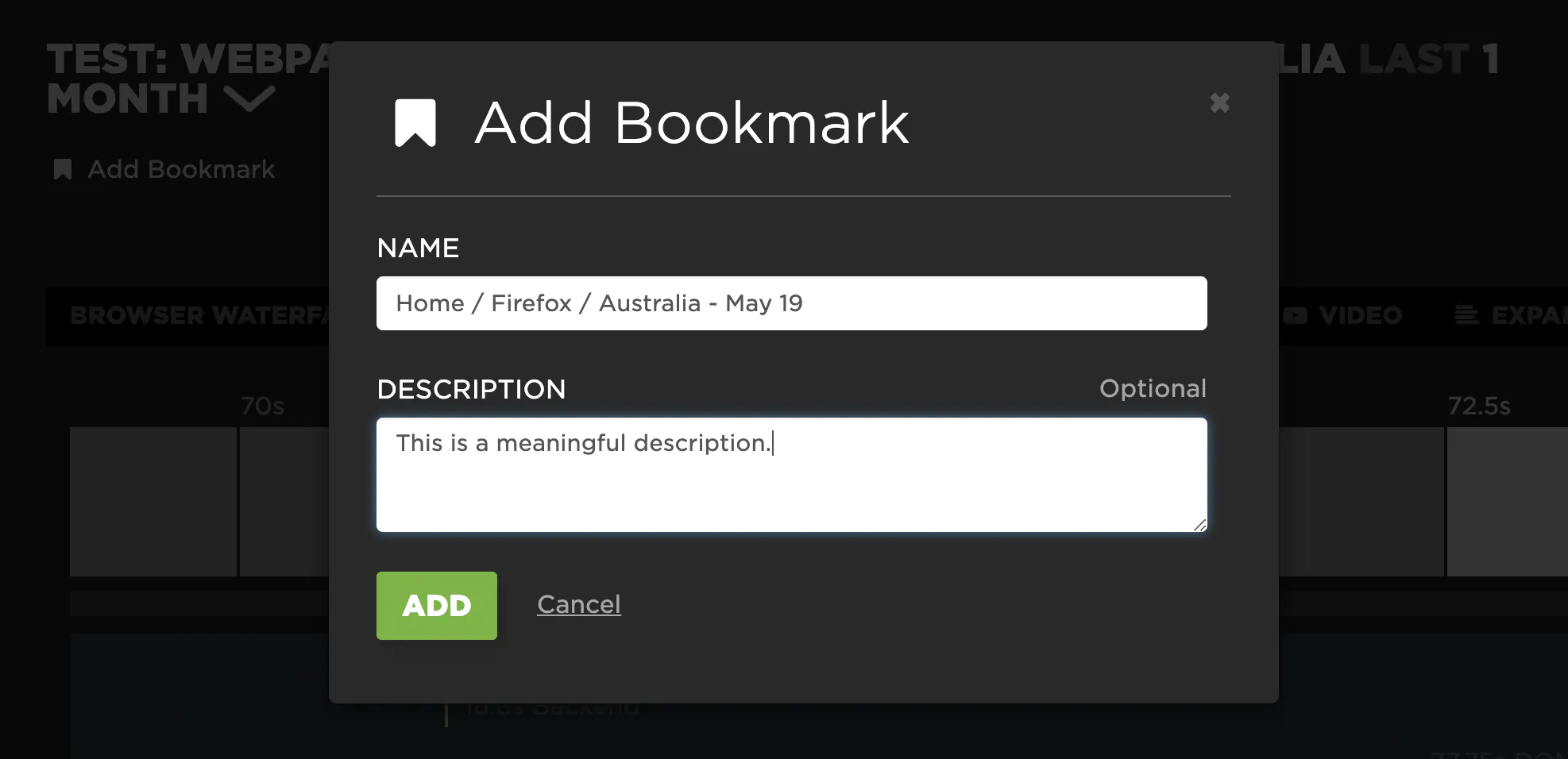

3. Then you have the option of giving the bookmark a meaningful name and/or description.

Naming the bookmark

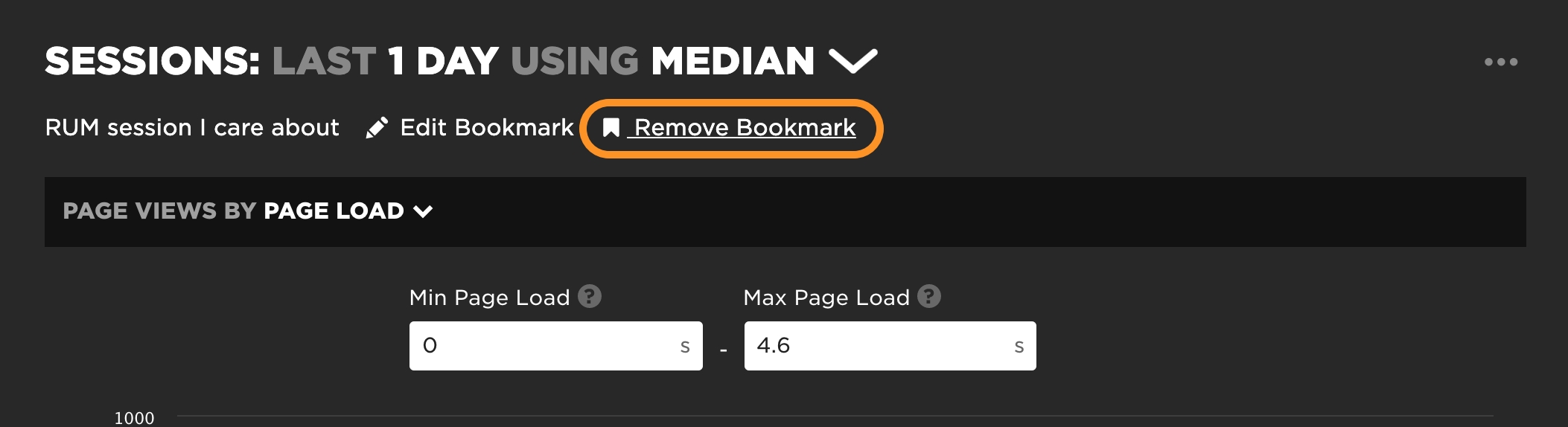

If you change your mind, just click "Remove Bookmark".

Removing a bookmark

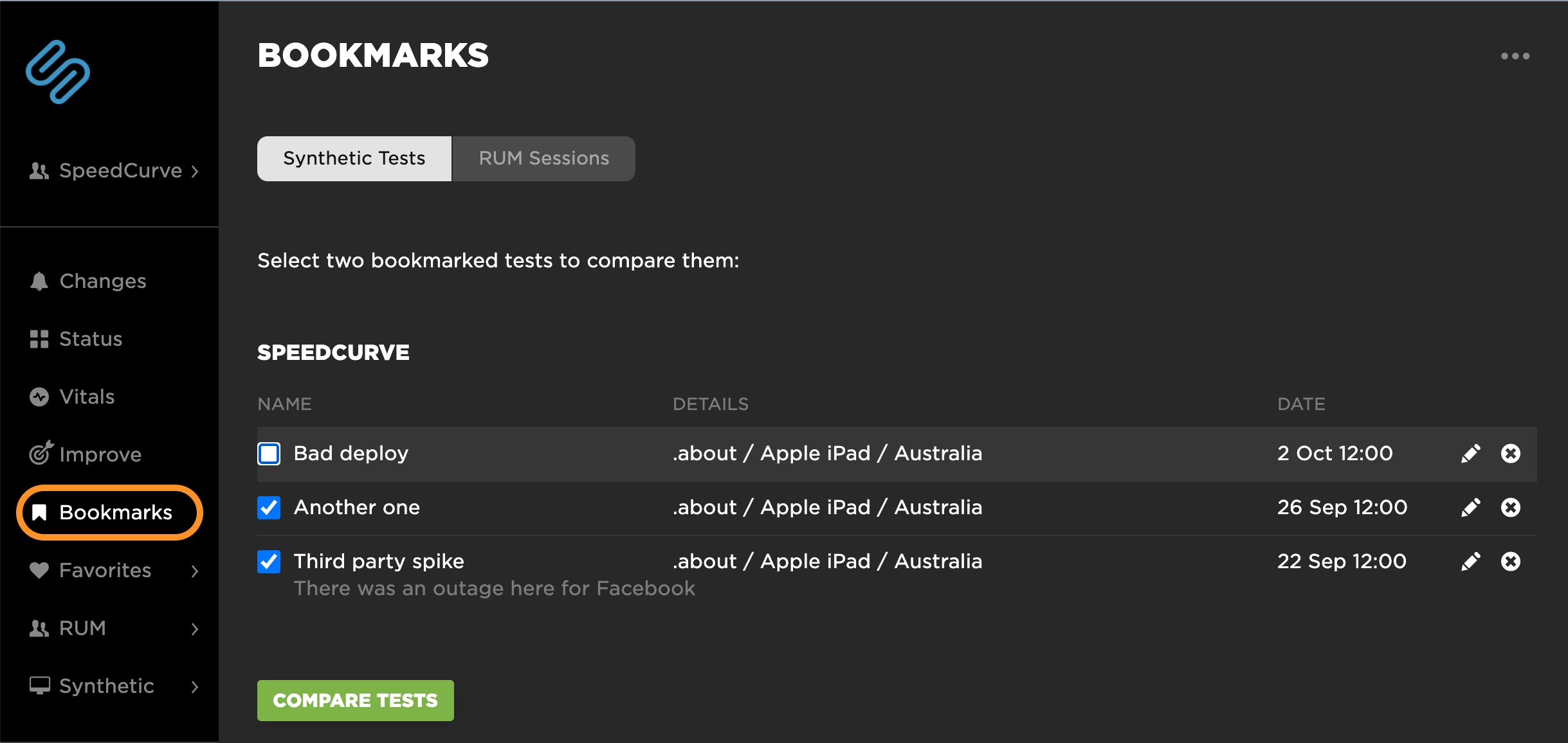

4. Clicking on "Bookmarks" from the main navigation shows you a list of all the tests or RUM sessions you've bookmarked – you can bookmark multiple tests and sessions, but you can only compare two synthetic tests at a time.

Navigating to the Bookmarks dashboard

How to bookmark and compare synthetic tests

Now let's walk through how to run a comparison and analysis using a real-world example from our "Top Sites" demo account.

1. In this demo account, we track a number of leading retail, travel, and media sites. I've randomly selected CNN for the purpose of this post.

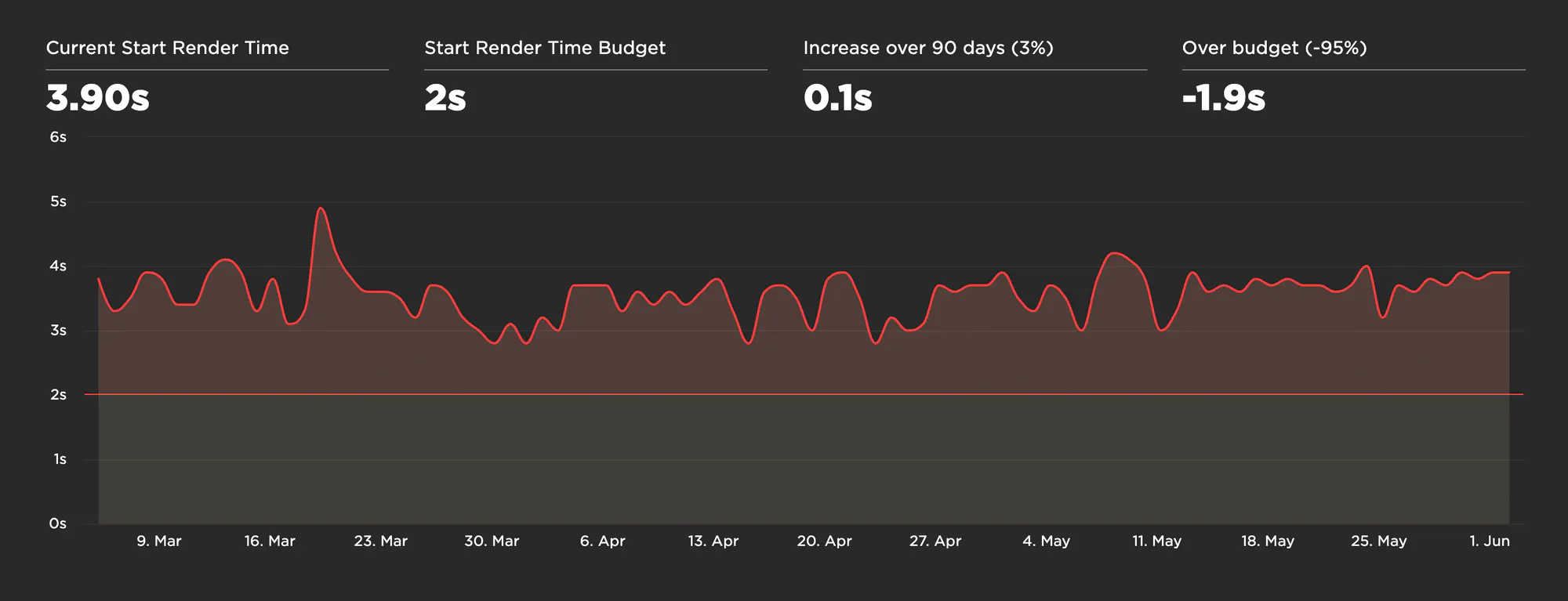

Start Render chart

Looking at the last three months' worth of Synthetic test data, we can see that the CNN home page has a Start Render time that's consistently above the 2-second threshold we usually recommend, and that Start Render has sometimes hit almost 5 seconds.

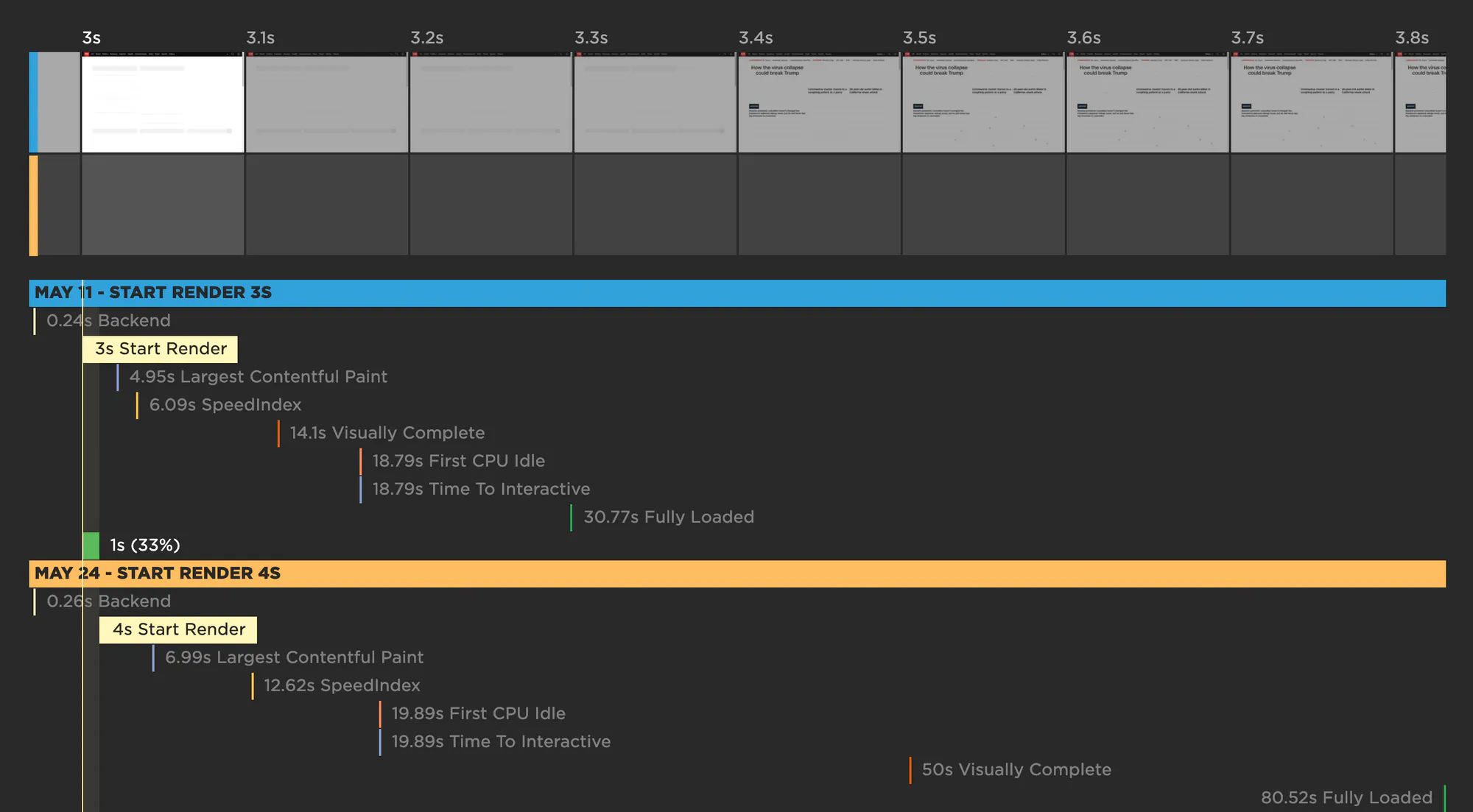
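Given a list of Start Render values for a set of test runs, you can check them against the 2-second threshold with a few lines of Python. This is a minimal sketch. The function name and any sample values you feed it are hypothetical, and it only illustrates the calculation.

```python
def summarize_start_render(samples_ms: list[float], budget_ms: float = 2000.0) -> dict:
    """Summarize how often Start Render exceeds a budget across test runs.

    `samples_ms` holds one Start Render value (in ms) per test run; the 2000 ms
    default mirrors the 2-second threshold discussed in this article.
    """
    if not samples_ms:
        raise ValueError("need at least one Start Render sample")
    over_budget = [s for s in samples_ms if s > budget_ms]
    return {
        "runs": len(samples_ms),
        "over_budget": len(over_budget),
        "share_over_budget": len(over_budget) / len(samples_ms),
        "worst_ms": max(samples_ms),
    }
```

A `share_over_budget` close to 1.0 corresponds to a chart that sits "consistently above" the threshold, and `worst_ms` picks out peaks like the one near 5 seconds.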

2. Let's look at some of the peaks and valleys on their time series chart to see if we can spot the issues. To start, we'll quickly bookmark these two tests:

- Monday 11 May - Start Render 3s

- Saturday 24 May - Start Render 4s

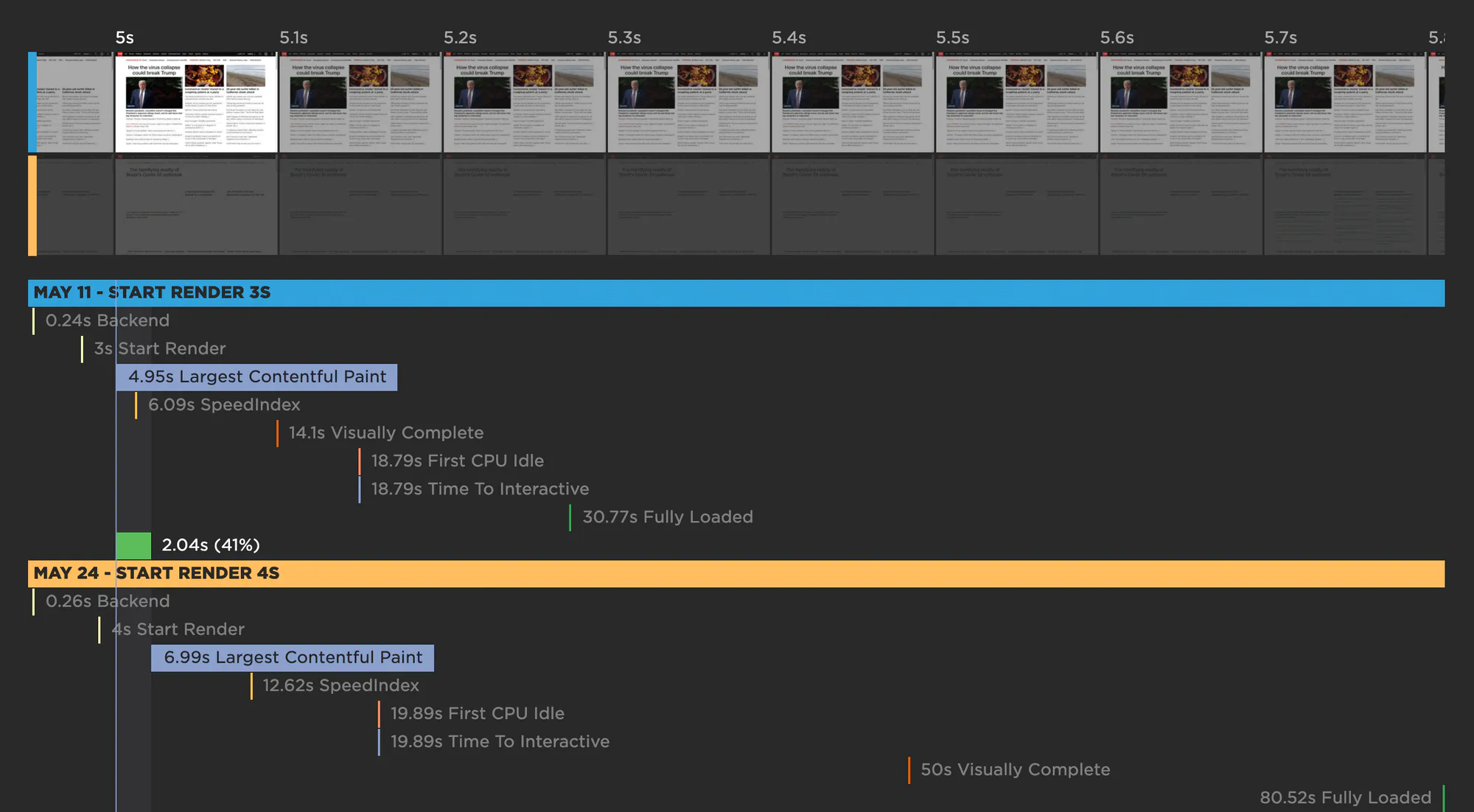

3. Now that we've selected these two tests, let's look at them side by side. Here you can see the visuals are stacked compactly so you can easily spot any differences. Hovering over any metric in the waterfall chart shows you the metric for both tests. It also shows you how the metrics align with the rendering filmstrips. For example, you can see that not much is happening in either filmstrip when Start Render fires:

Comparing two tests

For this particular page, Largest Contentful Paint looks like a more meaningful metric for tracking when important content has rendered, so let's look at that. Things look a bit better in the May 11 test, where Largest Contentful Paint fires at 4.95 seconds. In the May 24 test, LCP lags at almost 7 seconds.

Comparing Largest Contentful Paint

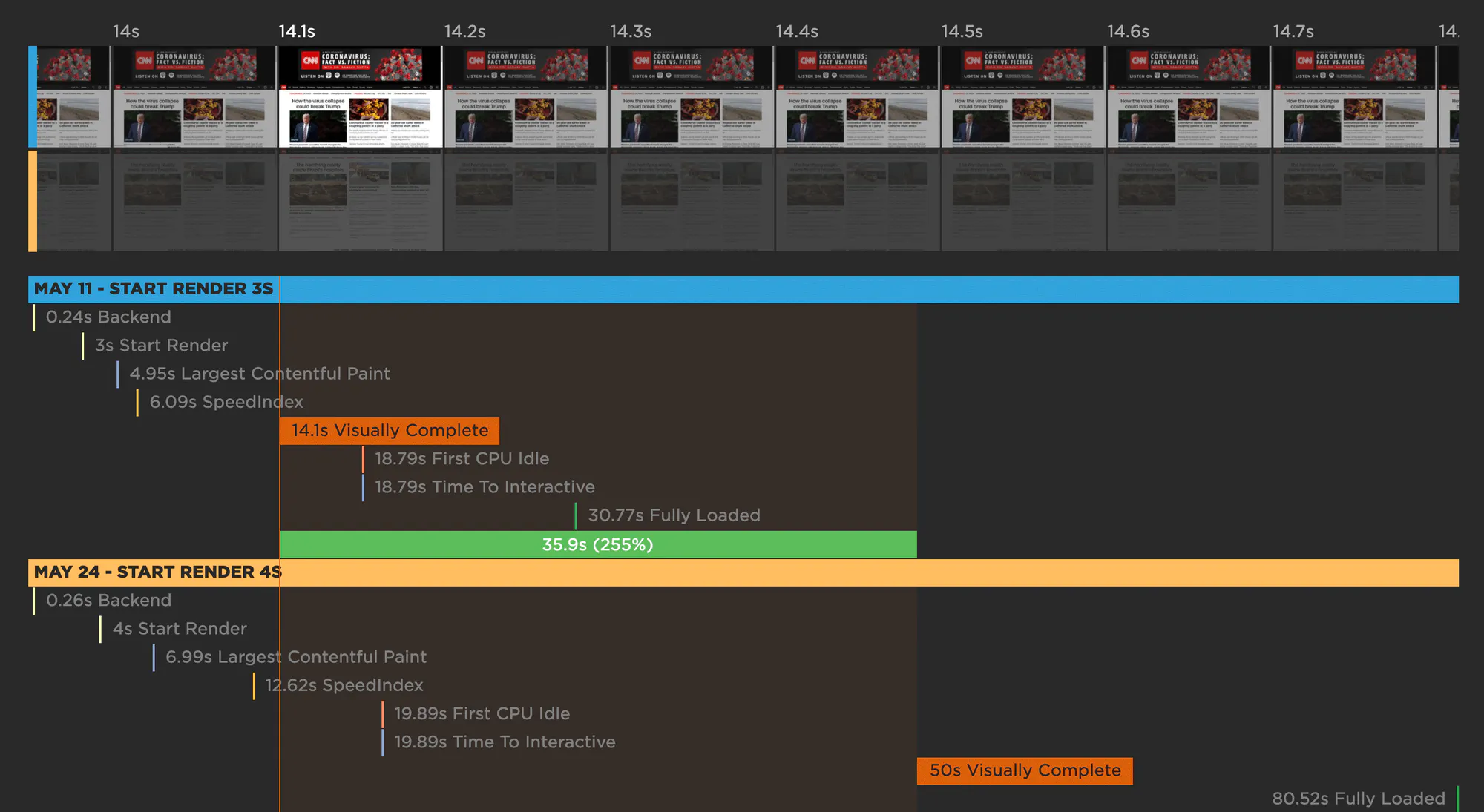

The gaps get much broader when you look at Visually Complete and Fully Loaded (below). Delays in those metrics can be an indicator that, even though the page's visible content might have rendered, the page might be janky and unpleasant to interact with.

Comparing Visual Completeness

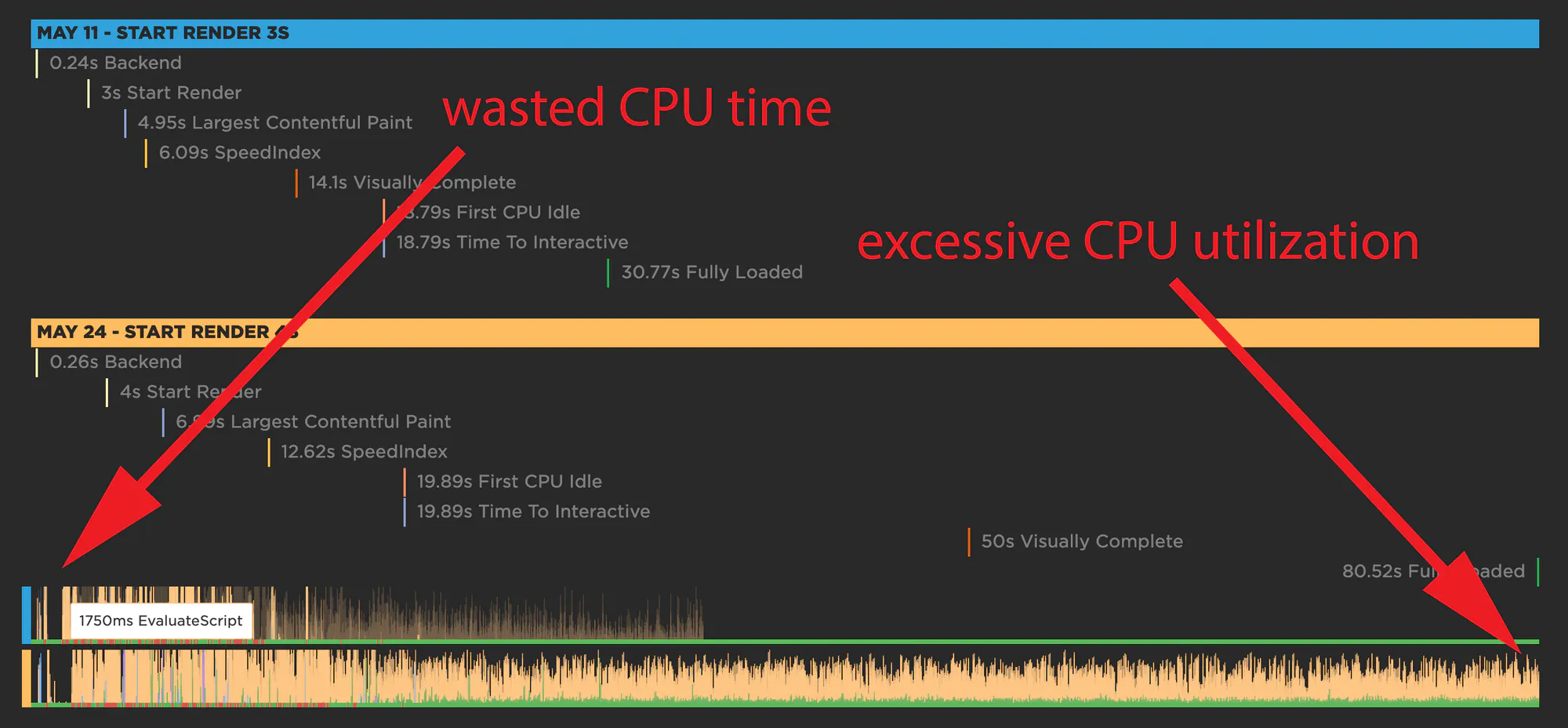

Just below the waterfalls, the CPU timelines are really telling:

Comparing CPU utilization

Ideally, you want to see a busy CPU at the start of the page render, but both tests show pockets of dead time early on. CPU utilization doesn't pick up until around 1.7 seconds for the May 11 test and 2.2 seconds for the May 24 test. This begins to explain the slow Start Render.

The CPU timeline for the May 24 test also shows that the CPU is thrashing for the entire 80-second duration that the page is rendering.
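One way to quantify that early dead time is to find the first moment the CPU becomes busy. The sketch below assumes a hypothetical list of `(time_ms, utilization)` samples and a hypothetical 50% "busy" threshold. Neither reflects a documented SpeedCurve data format.

```python
from typing import Optional


def first_busy_time(samples: list[tuple[float, float]], threshold: float = 0.5) -> Optional[float]:
    """Return the first timestamp (ms) at which CPU utilization reaches `threshold`.

    `samples` is a hypothetical list of (time_ms, utilization) pairs in time order,
    with utilization expressed as a fraction between 0 and 1. Returns None if the
    CPU never reaches the threshold.
    """
    for time_ms, utilization in samples:
        if utilization >= threshold:
            return time_ms
    return None
```

A result of around 1700ms or 2200ms would correspond to the early idle periods described above for the May 11 and May 24 tests.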

4. Based on the comparison so far, there are two questions for investigation:

- What is causing the delays in Start Render and Largest Contentful Paint? High Start Render and LCP times can give users the feeling that the page is unresponsive.

- What is causing the excessive CPU thrashing? This thrashing can give users the feeling that the page is janky.

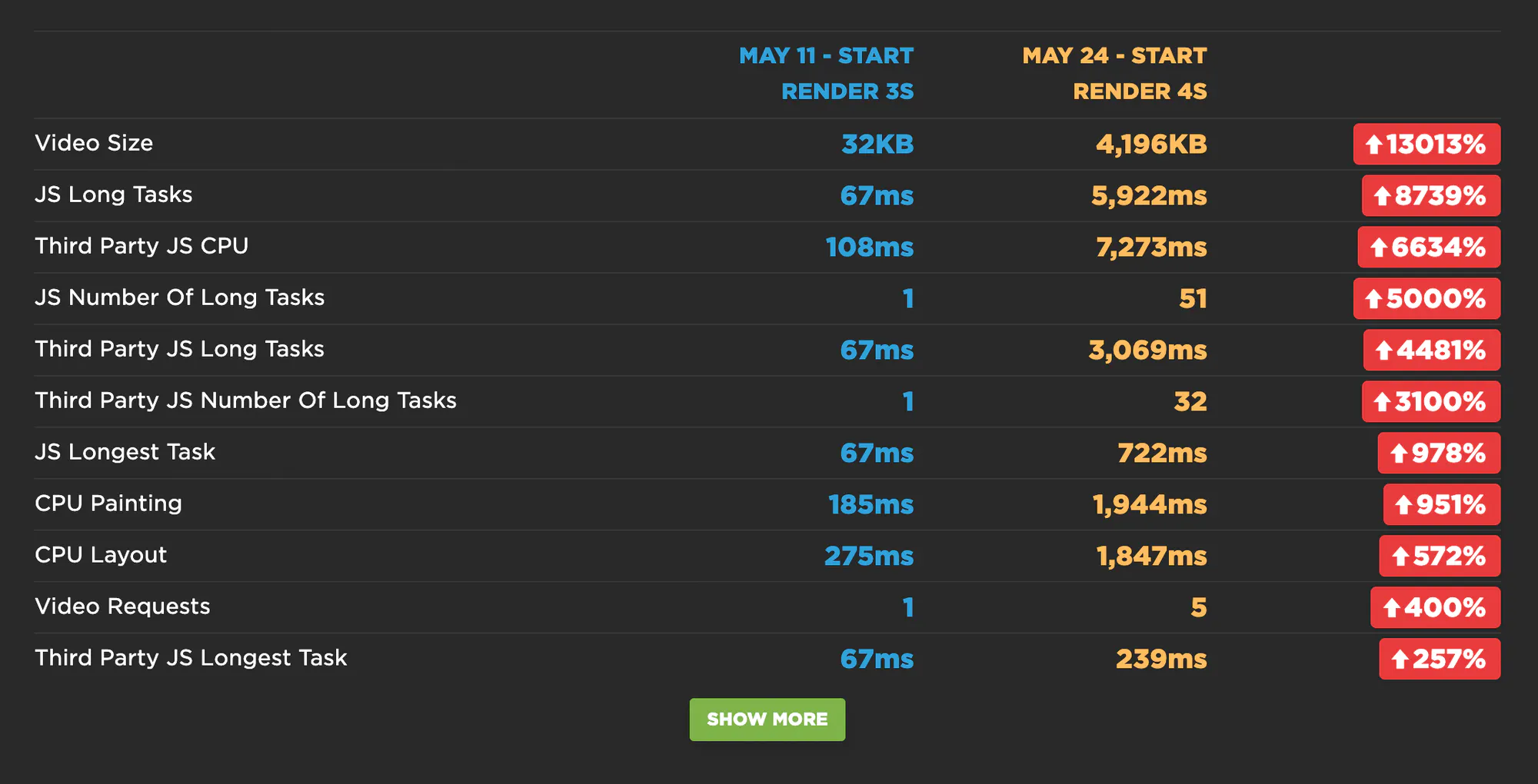

Time to dig deeper. To do that, we show you detailed metrics, along with a calculation of what got better or worse. The metrics with the biggest changes are shown at the top of each list, so the most significant differences stand out first.

Side-by-side view of CPU metrics

Looking at this list, it's pretty clear where some of the issues lie:

- Video size has jumped from 32KB to more than 4MB. This would help explain the extreme delays in Visually Complete and Fully Loaded.

- The number of JavaScript Long Tasks has leapt from 1 to 51, and 32 of these come from third parties. The longest Long Task is 722ms.

- On the slower page, third-party JavaScript CPU time is more than 7 seconds – 3 of which are blocking the CPU.

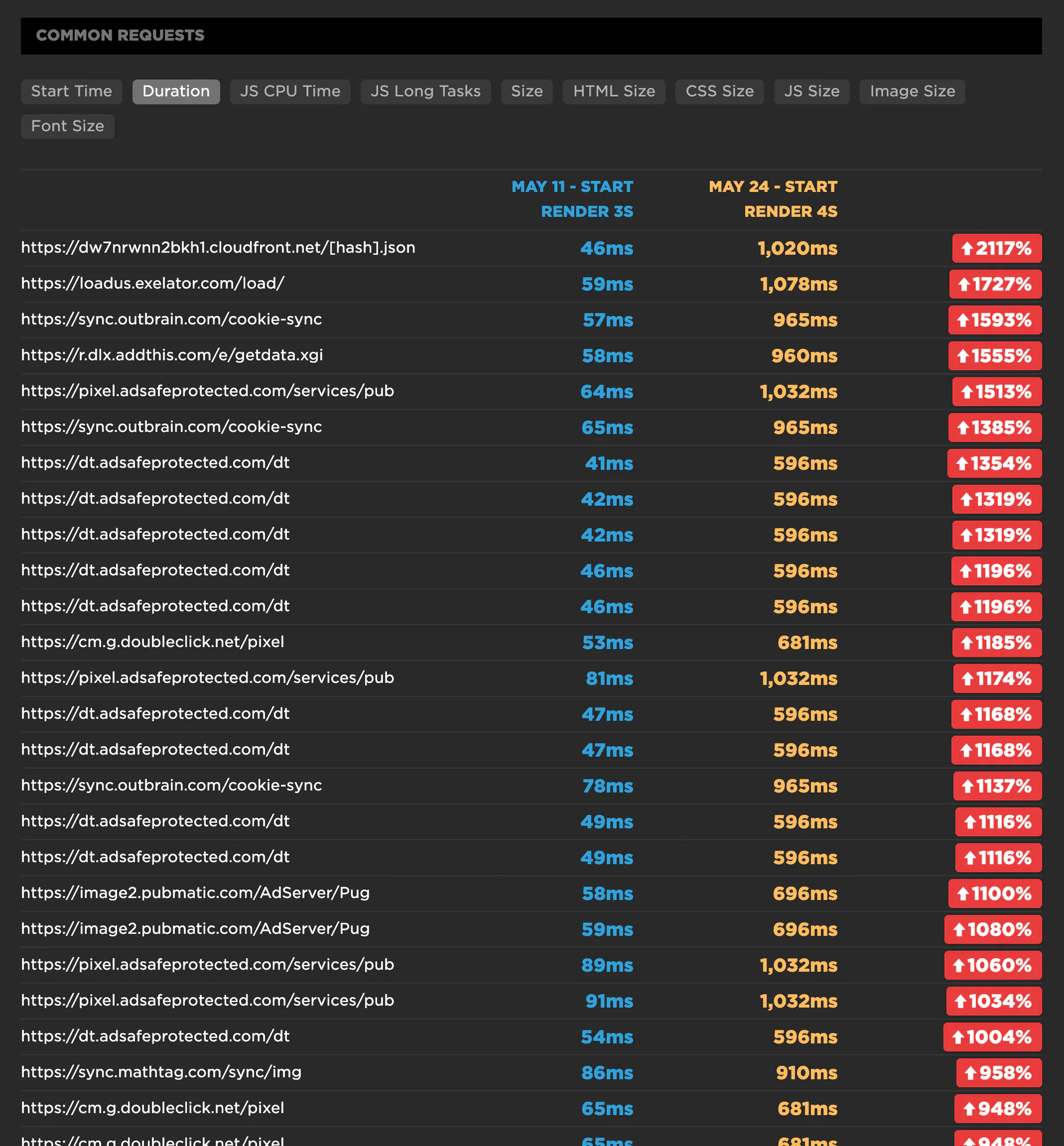
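A Long Task is a main-thread task that runs for more than 50ms, which is the threshold defined by the browser Long Tasks API. The sketch below summarizes a hypothetical list of tasks the same way the bullets above do: total count, third-party count, and the longest task. The `Task` record and its `third_party` flag are assumptions for illustration.

```python
from dataclasses import dataclass

# Long Tasks API threshold: a task counts as "long" if it runs for more than 50 ms.
LONG_TASK_THRESHOLD_MS = 50.0


@dataclass(frozen=True)
class Task:
    """Hypothetical main-thread task record."""
    duration_ms: float
    third_party: bool  # assumed attribution flag; real attribution data varies


def summarize_long_tasks(tasks: list[Task]) -> dict:
    """Count long tasks, how many of them are third-party, and the longest one."""
    long_tasks = [t for t in tasks if t.duration_ms > LONG_TASK_THRESHOLD_MS]
    return {
        "count": len(long_tasks),
        "third_party": sum(1 for t in long_tasks if t.third_party),
        "longest_ms": max((t.duration_ms for t in long_tasks), default=0.0),
    }
```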

5. Now that we know that the number and complexity of JS requests is a major issue, we can find out exactly which scripts appear to be the culprits. This is a list of all the common requests that are shared across both pages, along with a calculation of how much performance degradation each request experienced:

Comparing request duration

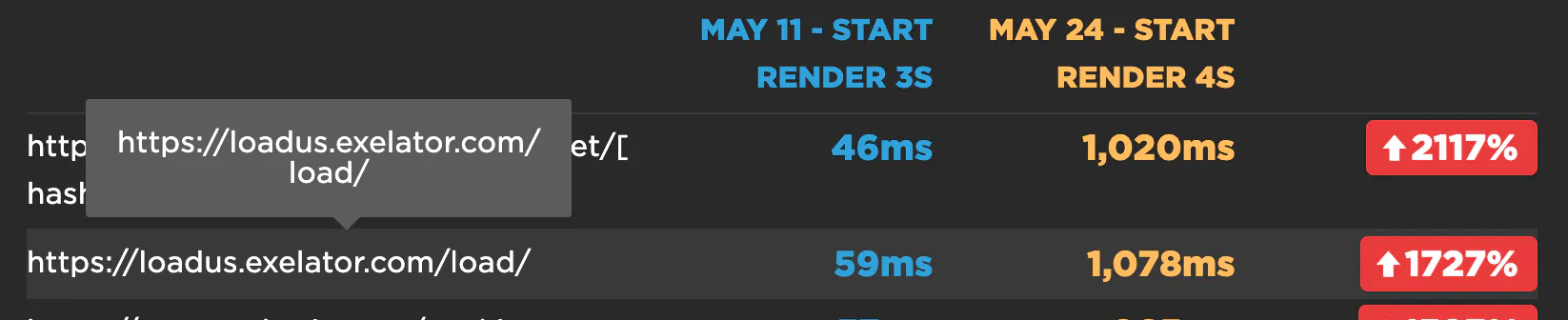
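This per-request comparison comes down to two steps: match the requests present in both tests, then compute a relative change for each one. Here's a minimal sketch that ranks common requests by percentage change in duration, worst first. The input format (a dict mapping a request label to its duration in milliseconds) is hypothetical. SpeedCurve may compute or rank degradation differently, for example by absolute milliseconds rather than percentage.

```python
def percent_change(before_ms: float, after_ms: float) -> float:
    """Relative change from `before_ms` to `after_ms`, in percent."""
    return (after_ms - before_ms) / before_ms * 100.0


def rank_by_degradation(before: dict[str, float], after: dict[str, float]) -> list[tuple[str, float]]:
    """Rank requests present in both tests by percent change in duration, worst first.

    `before` and `after` map a request label (e.g. a URL) to its duration in ms.
    Requests with a zero baseline are skipped, since a percent change is undefined.
    """
    common = before.keys() & after.keys()
    changes = [
        (label, percent_change(before[label], after[label]))
        for label in common
        if before[label] > 0
    ]
    return sorted(changes, key=lambda item: item[1], reverse=True)
```

For example, a duration that goes from 59ms to 1078ms works out to roughly +1727%, which matches the Exelator figure discussed below.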

6. Now let's take a closer look at one of those scripts:

Comparing individual requests

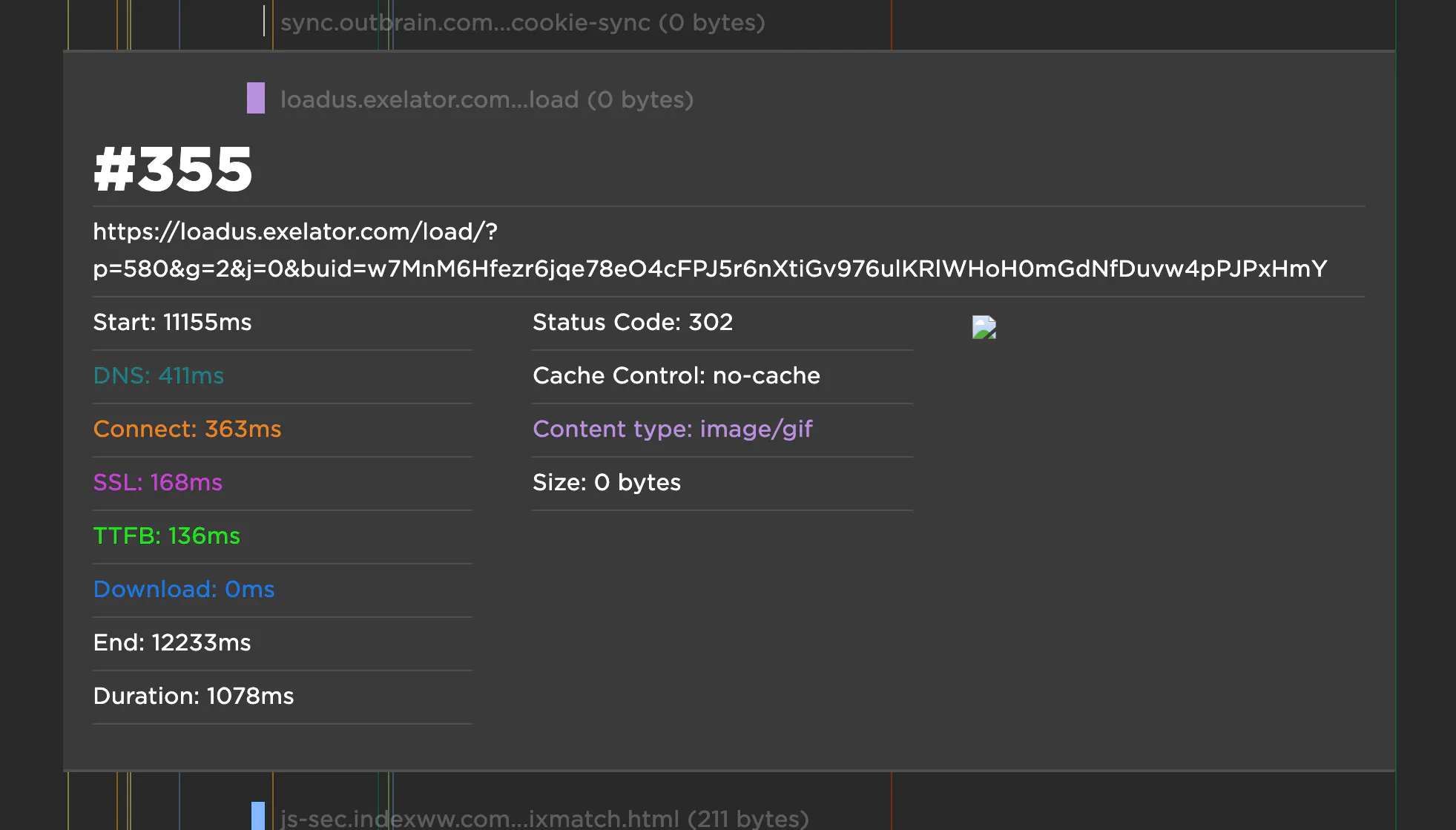

Exelator is a data collection tracker that is reportedly owned by Nielsen. Its duration has increased by 1727% – from 59ms to 1078ms. Clicking through to the full test result page for May 24 and searching for Exelator yielded four separate requests, with a total duration of more than 1600ms. Here's one of those requests:

Individual request metrics

It's important to note that three of those four requests had a size of 0 bytes, and the fourth was only 43 bytes. Keep this in mind next time you ponder adding an allegedly small third-party request to your pages.
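The "search the test result for a third party" step can be sketched as a simple filter that totals the size and duration of the matching requests, which makes the gap between bytes and time obvious. The `Req` record and `summarize_matching` function are hypothetical, and any request data you pass in is illustrative only.

```python
from typing import NamedTuple


class Req(NamedTuple):
    """Hypothetical request record from a test result."""
    url: str
    size_bytes: int
    duration_ms: float


def summarize_matching(requests: list[Req], needle: str) -> tuple[int, int, float]:
    """Return (count, total bytes, total duration ms) for requests whose URL contains `needle`.

    Matching is a case-insensitive substring search, similar in spirit to searching
    a test result page for a third-party name.
    """
    needle = needle.lower()
    matches = [r for r in requests if needle in r.url.lower()]
    return (
        len(matches),
        sum(r.size_bytes for r in matches),
        sum(r.duration_ms for r in matches),
    )
```

Four matching requests that total only 43 bytes can still add up to well over a second of request time, which is exactly the point made above.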

7. That was just one request issue among the many listed above. To drill down further, next steps could include looking at the detailed waterfall charts for each test run (May 11 and May 24) to see what else is happening before Largest Contentful Paint and Start Render to delay those metrics.

A quick glance at the waterfalls for both tests reveals:

- 40 requests before Start Render fires on the May 11 test

- 64 requests before Start Render fires on the May 24 test

- 105 requests before First Contentful Paint fires on the May 11 test

- 144 requests before First Contentful Paint fires on the May 24 test
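To reproduce counts like these from raw waterfall data, you could count the requests that start before a metric's timestamp. The sketch assumes a hypothetical list of request start times in milliseconds and treats "before" as starting strictly earlier than the metric. Applied to the numbers above, the May 24 test has 24 more requests before Start Render (64 vs. 40) and 39 more before First Contentful Paint (144 vs. 105).

```python
from bisect import bisect_left


def requests_before(start_times_ms: list[float], metric_ms: float) -> int:
    """Count requests that start strictly before a metric fires.

    `start_times_ms` is a hypothetical list of request start offsets (ms from
    navigation start); `metric_ms` is the metric's timestamp, e.g. Start Render.
    """
    # bisect_left on a sorted list returns the number of elements < metric_ms.
    return bisect_left(sorted(start_times_ms), metric_ms)
```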

Those are a LOT of requests. The volume alone is enough to create performance issues. Add to that any episodic issues with serving those requests, and you can see how easily performance can degrade.

This is just the beginning of how you can analyze any two tests. Hopefully this walkthrough will get you started. As always, we welcome your feedback!

Video Walkthrough

If you'd like to watch someone else do this, here's Cliff with a three-part video walkthrough.
