Troubleshooting Lighthouse results

Issues with your Lighthouse tests or results? Here are the answers to some common questions.

Lighthouse is an open-source automated tool for auditing the quality of web pages. You can run Lighthouse tests on any web page to get a series of scores for performance, accessibility, SEO, and more. Every time you run a synthetic test in SpeedCurve, your Lighthouse scores appear at the top of your test results page by default.

Lighthouse scores are different from your other test results

There are a number of reasons why your Lighthouse scores in SpeedCurve might differ from the scores you see in other tools, such as PageSpeed Insights. Here are a few common reasons, along with our recommendation not to focus too much on getting your scores to "match".

Lighthouse scores aren't showing up

If you're not seeing your Lighthouse scores, the page you're testing may have security certificate (SSL/TLS) issues. If that's the case, Lighthouse won't run.

To find out what the issue is, open one of your test results and click the Lighthouse Report link below the waterfall chart:

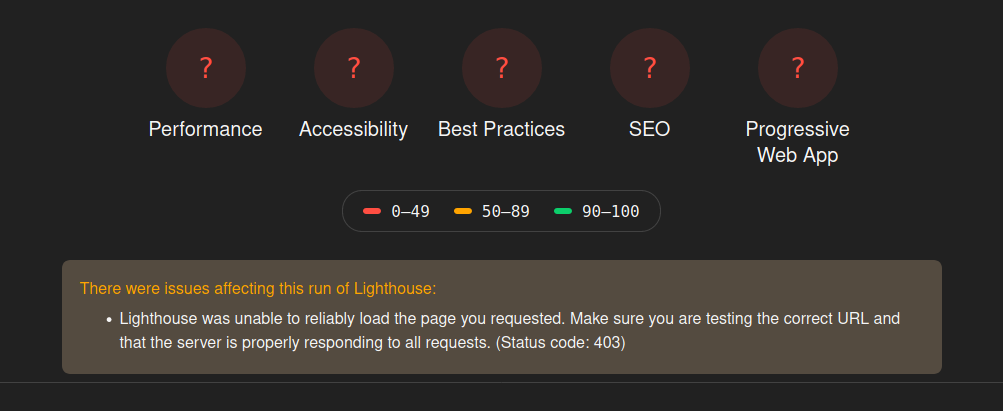

On the Lighthouse Report page, you should find information that explains why Lighthouse wasn't able to run.

A common cause of failures is Lighthouse being blocked by bot detection or DDoS protection software, which returns a 403 (Forbidden) error. To resolve this, allow web traffic whose user-agent string contains "PTST/SpeedCurve".
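If your bot protection is your own code rather than a vendor product, the allowlist rule can be as simple as a substring check on the User-Agent header. The sketch below is a hypothetical Python WSGI middleware: `is_speedcurve_request`, `bot_check_middleware`, and the `looks_like_bot` callback are illustrative names, not part of SpeedCurve or any specific product. Commercial DDoS and bot-management services have their own allowlisting settings, so follow your vendor's documentation for those.

```python
from typing import Callable, Iterable, Optional

# Token that SpeedCurve test agents include in their User-Agent header.
SPEEDCURVE_UA_TOKEN = "PTST/SpeedCurve"


def is_speedcurve_request(user_agent: Optional[str]) -> bool:
    """Return True if the User-Agent header contains the SpeedCurve token."""
    return bool(user_agent) and SPEEDCURVE_UA_TOKEN in user_agent


def bot_check_middleware(app: Callable, looks_like_bot: Callable[[dict], bool]) -> Callable:
    """Wrap a WSGI app so suspected bots get a 403, unless they are SpeedCurve agents.

    `looks_like_bot` is a hypothetical stand-in for your existing detection logic.
    """

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        user_agent = environ.get("HTTP_USER_AGENT")
        if looks_like_bot(environ) and not is_speedcurve_request(user_agent):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Forbidden"]
        # Allowlisted or not flagged: pass the request through unchanged.
        return app(environ, start_response)

    return wrapped
```

You would wrap your application once, for example `app = bot_check_middleware(app, my_bot_detector)`. User-agent strings are easy to spoof, so treat this as a convenience exemption rather than a security control.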

Gaps in your charts

If you're seeing gaps in your Lighthouse charts, it means Lighthouse didn't return any data for that metric in that test. This is a Lighthouse issue, so we don't have any control over it. When we notice more issues than normal, we pass on bug reports to the Lighthouse team.
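If you have a Lighthouse JSON report for an affected test (for example, from running Lighthouse yourself on the same page), you can check which metrics it is missing before raising the issue. This minimal Python sketch assumes the standard Lighthouse report layout, where each metric is an entry in `audits` with a `numericValue` and, when the audit failed, `scoreDisplayMode` set to "error" along with an `errorMessage`. The list of audit IDs is an illustrative subset, and `find_missing_metrics` and `load_report` are hypothetical helper names.

```python
import json
from typing import Any, Dict, List, Sequence, Tuple

# Illustrative subset of Lighthouse metric audit IDs; adjust to the metrics you chart.
METRIC_AUDITS = (
    "first-contentful-paint",
    "largest-contentful-paint",
    "total-blocking-time",
    "cumulative-layout-shift",
    "speed-index",
)


def load_report(path: str) -> Dict[str, Any]:
    """Read a Lighthouse JSON report from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def find_missing_metrics(
    lhr: Dict[str, Any], audit_ids: Sequence[str] = METRIC_AUDITS
) -> List[Tuple[str, str]]:
    """Return (audit_id, reason) pairs for metrics that have no usable value."""
    audits = lhr.get("audits", {})
    missing: List[Tuple[str, str]] = []
    for audit_id in audit_ids:
        audit = audits.get(audit_id)
        if audit is None:
            missing.append((audit_id, "audit not present in report"))
        elif audit.get("scoreDisplayMode") == "error":
            # Lighthouse records why the audit failed in errorMessage.
            missing.append((audit_id, audit.get("errorMessage", "audit errored")))
        elif audit.get("numericValue") is None:
            missing.append((audit_id, "no numericValue"))
    return missing
```

For example, `find_missing_metrics(load_report("report.json"))` lists each missing metric with the reason Lighthouse gave, which is useful detail to include in a bug report.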

If you're concerned about excessive gaps in your data, check whether your issue is already being discussed in the Lighthouse GitHub repository. If it isn't, you can ask the Lighthouse team directly via GitHub or Twitter (@____lighthouse).

Synthetic scripts don't work in Lighthouse

Lighthouse doesn't support synthetic scripts, so any scripted steps in your SpeedCurve test aren't applied when Lighthouse runs.

Lighthouse Accessibility scores are provided "as is"

We provide the Lighthouse accessibility scores as part of the overall Lighthouse integration. Accessibility isn't our area of expertise, so we give you those Lighthouse scores and audits "as-is".

People sometimes ask whether Lighthouse checks against WCAG 2.1. According to the Lighthouse GitHub repository, Lighthouse runs the rules tagged wcag2a and wcag2aa. This means the tests check for conformance at levels A and AA.

If you have questions about your Lighthouse Accessibility score, the best option is to contact the Lighthouse team directly. Check whether your issue is already being discussed in their GitHub repository and, if it isn't, post your question there. You can also ask them via Twitter (@____lighthouse).
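Before asking, it can help to pin down exactly which accessibility audits failed. The following Python sketch assumes you have the Lighthouse JSON report for the test and relies on the report's `categories.accessibility.auditRefs` list and each audit's `score` field (0 to 1, or null for manual, informative, and not-applicable audits). The function name `failing_accessibility_audits` is hypothetical.

```python
from typing import Any, Dict, List


def failing_accessibility_audits(lhr: Dict[str, Any]) -> List[str]:
    """Return IDs of accessibility audits that scored below 1 in a Lighthouse JSON report."""
    category = lhr.get("categories", {}).get("accessibility", {})
    audits = lhr.get("audits", {})
    failing: List[str] = []
    for ref in category.get("auditRefs", []):
        audit = audits.get(ref["id"], {})
        score = audit.get("score")
        # Manual, informative, and not-applicable audits have a null score; skip them.
        if score is not None and score < 1:
            failing.append(ref["id"])
    return failing
```

Including the failing audit IDs in your question makes it much easier for the Lighthouse team to see which checks you're asking about.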

Reporting Lighthouse issues

If you find an issue with a Lighthouse test, we recommend reporting it to the Lighthouse issue tracker.
