Lighthouse scores and audits

How to find and track your Lighthouse scores and audits.

Lighthouse is an open-source automated tool for auditing the quality of web pages. You can run Lighthouse tests on any web page and get a series of scores for performance, accessibility, SEO, and more.

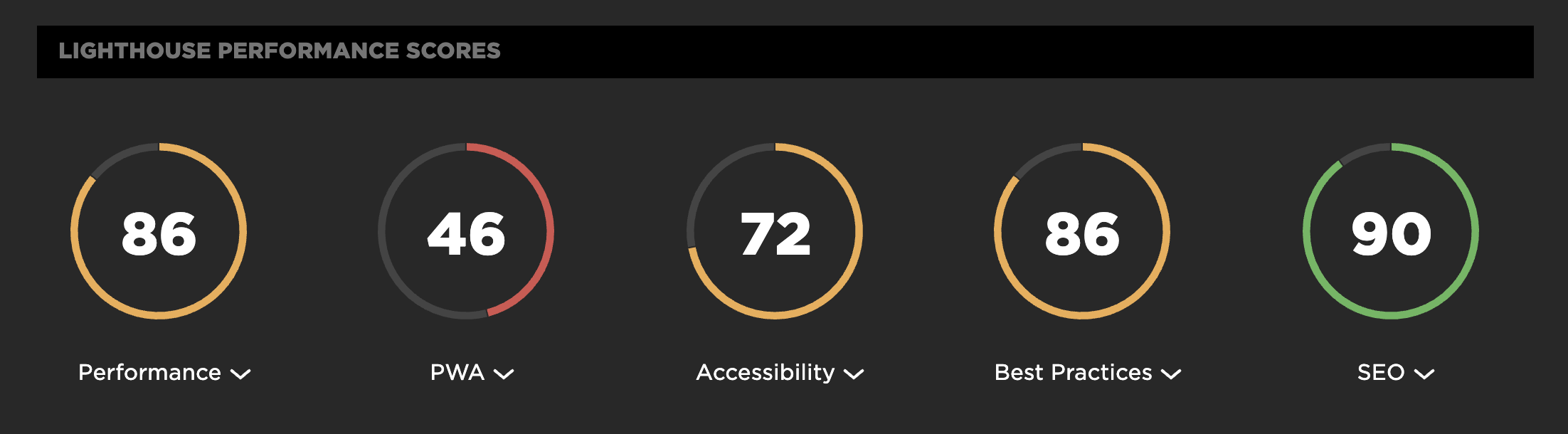

You can run Lighthouse within Chrome DevTools, from the command line, and as a Node module. But there's no need to do any of those if you're already using SpeedCurve Synthetic monitoring. Every time you run a synthetic test, your Lighthouse scores appear at the top of your test results page by default.
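If you do run Lighthouse yourself from the command line, you can ask for a JSON report (for example, `lighthouse https://example.com --output=json --output-path=./report.json`) and read the scores out of it. The following is a minimal sketch, not part of SpeedCurve: it assumes the report has a top-level `categories` object whose entries each carry a `score` between 0 and 1, and it scales those to the 0-100 values shown in the UI. The function name `category_scores` and the sample values are made up for illustration.

```python
import json


def category_scores(report: dict) -> dict:
    """Return {category_id: score on a 0-100 scale} from a Lighthouse JSON report.

    Assumes each entry under "categories" has a "score" in the range 0-1,
    or None when Lighthouse could not score that category.
    """
    scores = {}
    for category_id, category in report.get("categories", {}).items():
        raw = category.get("score")
        scores[category_id] = None if raw is None else round(raw * 100)
    return scores


# Inline sample standing in for json.load(open("report.json")).
# The values are invented for illustration only.
sample_report = json.loads("""
{"categories": {
  "performance": {"score": 0.47},
  "accessibility": {"score": 0.9},
  "seo": {"score": 1}
}}
""")

print(category_scores(sample_report))
```

To use it on a real report, replace the inline sample with the parsed contents of your own `report.json`.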

Get started with Lighthouse

If you're already a SpeedCurve user, you can drill down into your individual test results and find your Lighthouse scores at the top of the page.

(If you're not a SpeedCurve user, you can sign up for a free trial and check out your Lighthouse scores and the dozens of other performance and UX metrics we track for you.)

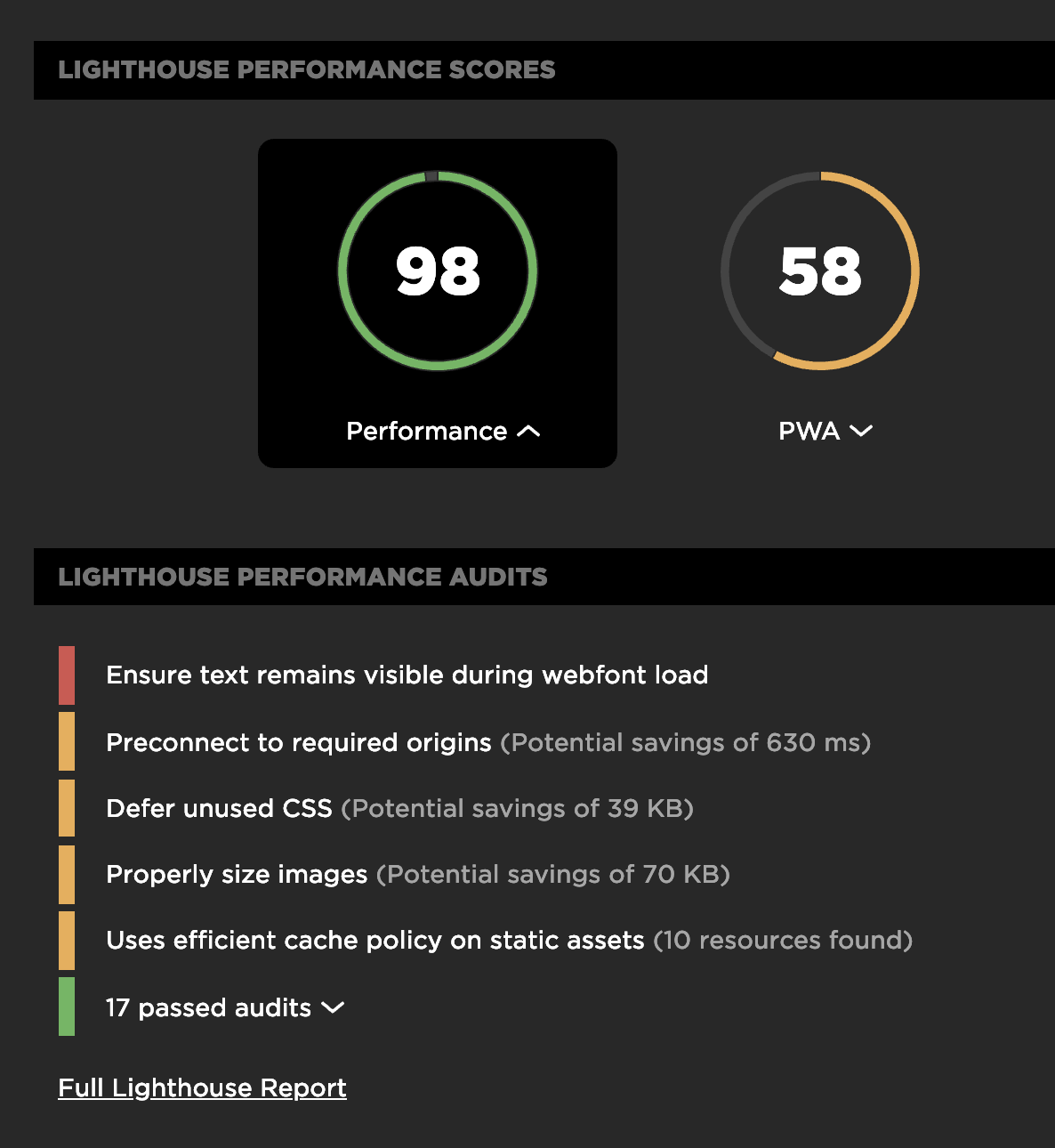

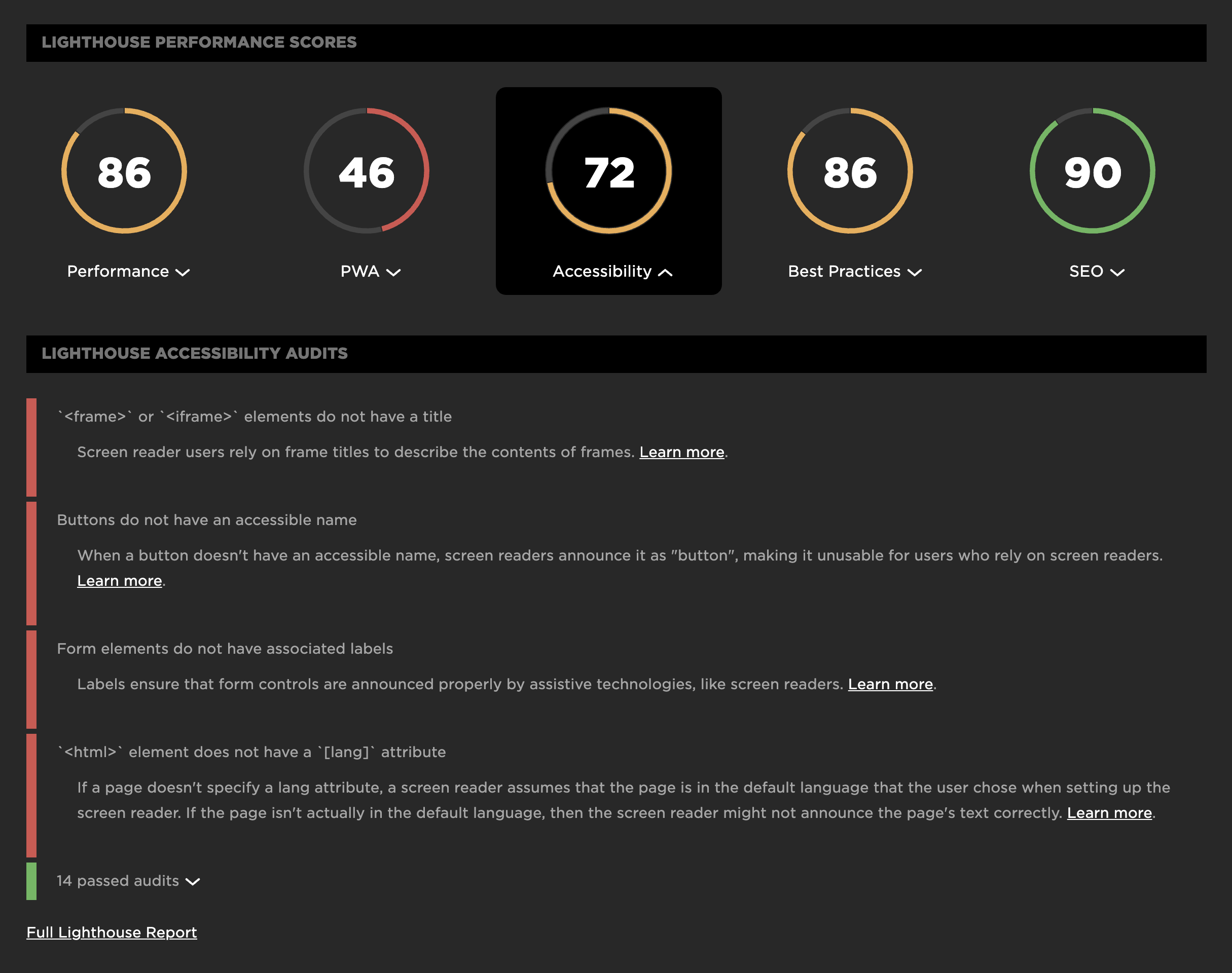

To see the full Lighthouse report, click on the score you're interested in (e.g., 'Performance'), which will open up the list of audits for that score. At the bottom of that list is a text link called 'Full Lighthouse Report', which will take you to the detailed report on the Lighthouse site.

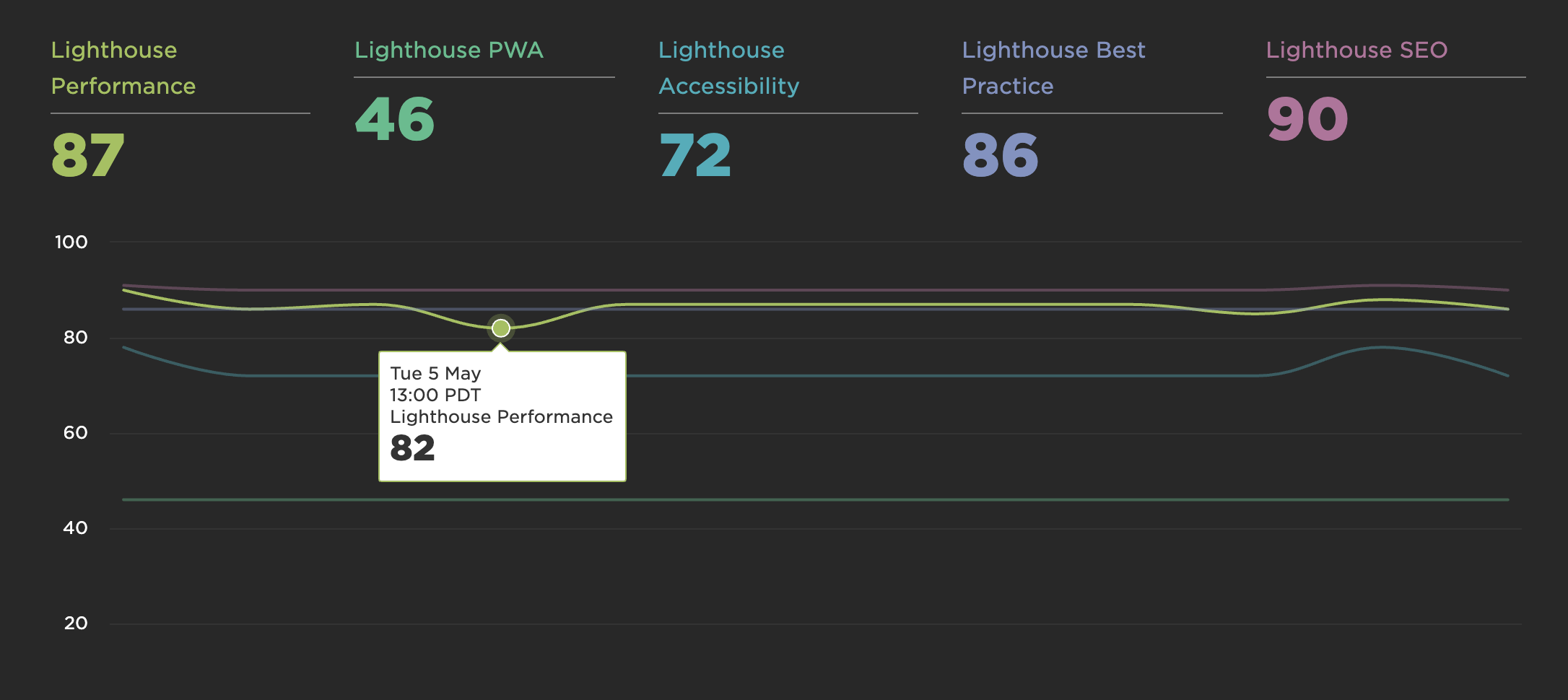

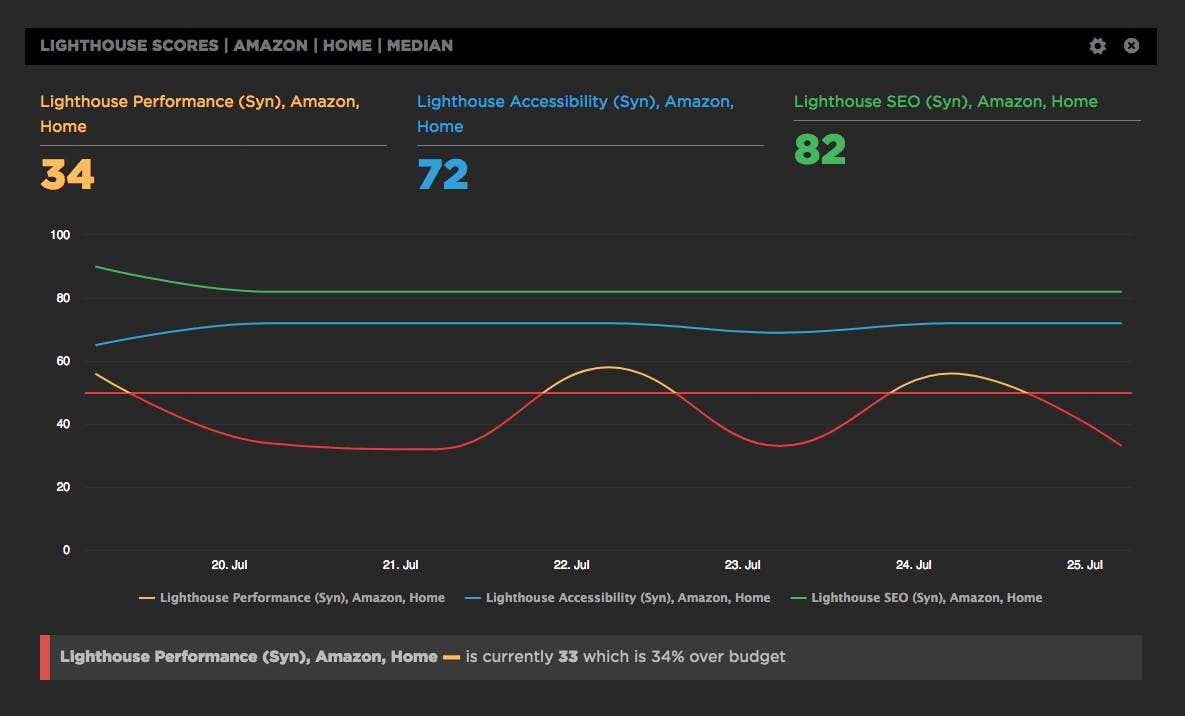

On your Synthetic Sites dashboard, you can also track your Lighthouse scores over time.

View aggregated Lighthouse scores across all your URLs

In addition to the Lighthouse results at the top of your individual test results, you can see results aggregated across all of the URLs in your site. The Improve dashboard is your own performance checklist, based on Lighthouse audits aggregated across all of your URLs.
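To make the idea of aggregating audits across URLs concrete, here is a rough sketch of one way to build such a checklist yourself from several Lighthouse JSON reports. It is not how SpeedCurve's Improve dashboard is implemented. It assumes each report has an `audits` object keyed by audit ID with a `score` between 0 and 1 (or null for informational audits), and it treats a score below 0.9 as failing; the function name, the threshold parameter, the URLs, and the sample scores are all illustrative assumptions.

```python
from collections import Counter


def failing_audit_counts(reports_by_url: dict, pass_threshold: float = 0.9) -> list:
    """Count, per audit ID, how many URLs fail that audit.

    Returns (audit_id, failing_url_count) pairs, most widespread first,
    with ties broken alphabetically so the order is deterministic.
    """
    counts = Counter()
    for report in reports_by_url.values():
        for audit_id, audit in report.get("audits", {}).items():
            score = audit.get("score")
            # Audits without a numeric score (e.g. informational) are skipped.
            if score is not None and score < pass_threshold:
                counts[audit_id] += 1
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Invented sample data: two URLs, three audits each.
reports = {
    "https://example.com/": {"audits": {
        "uses-optimized-images": {"score": 0.4},
        "render-blocking-resources": {"score": 0.5},
        "document-title": {"score": 1},
    }},
    "https://example.com/products": {"audits": {
        "uses-optimized-images": {"score": 0.2},
        "render-blocking-resources": {"score": 1},
        "document-title": {"score": 1},
    }},
}

for audit_id, url_count in failing_audit_counts(reports):
    print(f"{audit_id}: failing on {url_count} of {len(reports)} URLs")
```

Sorting by the number of affected URLs puts the most widespread problems at the top, which is the property that makes an aggregated list useful as a checklist.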

Why we added Lighthouse to SpeedCurve

We had a number of compelling reasons for adding Lighthouse to SpeedCurve:

1. Track metrics that correlate to UX

The best performance metrics are those that help you understand how people experience your site. One of the things we like about Lighthouse is that – like SpeedCurve – it tracks user-oriented metrics like Speed Index, First Meaningful Paint, and Time to Interactive.

2. See all your improvement recommendations in one place

SpeedCurve already gives you performance recommendations on your test results pages. Now you can get all your recommendations – including those for accessibility and SEO – on the same page.

3. Improve SEO, especially for mobile

Several of our customers have told us that their SEO teams are very interested in using Lighthouse to guide their work, now that Google has announced that page speed is a ranking factor for mobile search.

4. Monitor a unicorn metric for CEOs and executives

When Google talks, executives listen. Many of our customers have told us that their CEO or other C-level folks don't really care about individual metrics. They want a single aggregated score – a unicorn metric – that's easy to digest and to track over time.

5. Get alerts when your Lighthouse scores "fail"

One of the great things about running your Lighthouse tests within SpeedCurve is that you can use your Favorites dashboard to create custom charts that let you track each Lighthouse metric. You can also create performance budgets for the metrics you (or your executive team) care about most and get alerts when that budget goes out of bounds.

For example, the custom chart below tracks three Lighthouse scores – performance, accessibility, and SEO – for the Amazon.com home page. I've also created a (very modest) performance budget of 50 out of 100 for the Lighthouse Performance score. That budget is tracked in the same chart, and you can see that the budget has gone out of bounds a couple of times in the week since we activated Lighthouse. You can also see, interestingly, that this score has much more variability than the other scores. This would merit some deeper digging.
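The two observations in that example (a budget going out of bounds, and one score varying more than the others) can be sketched in a few lines. This is only an illustration of the arithmetic, not SpeedCurve's alerting logic. It assumes a score budget is "out of bounds" when the score drops below it, and the daily values are invented, not the Amazon.com data from the chart.

```python
from statistics import pstdev


def budget_violations(daily_scores, budget):
    """Return the (day, score) pairs where the score fell below the budget."""
    return [(day, score) for day, score in daily_scores if score < budget]


# Invented week of daily scores, for illustration only.
performance = [("Mon", 55), ("Tue", 48), ("Wed", 61), ("Thu", 43),
               ("Fri", 58), ("Sat", 52), ("Sun", 57)]
accessibility = [("Mon", 88), ("Tue", 88), ("Wed", 89), ("Thu", 88),
                 ("Fri", 88), ("Sat", 89), ("Sun", 88)]

# A (very modest) budget of 50 out of 100 on the performance score.
violations = budget_violations(performance, budget=50)
print("Out of bounds:", violations)

# Population standard deviation as a simple measure of variability.
perf_spread = pstdev(score for _, score in performance)
a11y_spread = pstdev(score for _, score in accessibility)
print(f"Performance spread: {perf_spread:.1f}, accessibility spread: {a11y_spread:.1f}")
```

A noticeably larger spread for one score than for the others is the kind of signal that merits the deeper digging mentioned above.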

Lighthouse vs PageSpeed

In November 2018, Google released v5 of the PageSpeed Insights tool. This was a major change: under the hood, it switched to using Lighthouse for all its audits and scoring.

SpeedCurve has included Lighthouse audits and scores since July 2018, so we'll soon be deprecating and removing the old v4 PageSpeed score.

If you haven't already, you should update any performance budgets to use the "Lighthouse Performance" score.

Your Lighthouse scores might be different from your other test results

There are a number of reasons why your Lighthouse scores in SpeedCurve might be different from the scores you see in other tools, such as PageSpeed Insights. Here are a few common reasons, along with our recommendation not to focus too much on getting your scores to "match".

Troubleshooting Lighthouse

If you have questions or issues with your Lighthouse tests or data, here are the answers to some common questions.
